For at least 70 years, scholars have been researching the causes and effects of political polarization, the influence of peer pressure on belief formation and on the internalization (or rejection) of evidence, and the relationship between identity, social support, emotion, and the sense of what is real (or not). “When Prophecy Fails,” a seminal work in the area, was published in 1956. The study of the influence of social networks on all this is, of course, more recent, because the networks themselves are a relatively recent phenomenon: Facebook turns twenty next year, and Twitter dates from 2006.

Those who work to combat misinformation and, more generally, to spread correct information in conflict-ridden contexts (such as pseudoscientific controversies, for example about global warming or the safety of vaccines), and who want to keep up with research in this area, face a great effort: not only to get acquainted with the classics and master the basic concepts of the field, but also to follow the recent literature and, most importantly, to try to extract some practical meaning and direction from the experimental results. After all, to what extent do effects detected in the laboratory, in simulations, or through survey questionnaires reflect how people think and interact “in the wild”?

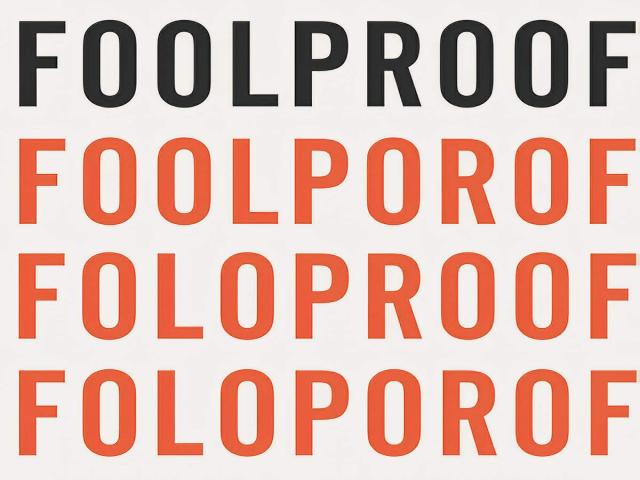

To the delight of everyone (or, at least, me), that effort has been significantly reduced in the past month with the publication of “Foolproof” (“À Prova de Idiotas,” in my free translation), a book by social psychologist Sander van der Linden, of the University of Cambridge. It is a kind of “A Brief History of Time” of disinformation research: like Stephen Hawking’s work on cosmology, it is an accessible and entertaining book, written by one of the field’s leading authorities, that presents and explains the field to the lay reader, covering not just classic results but advanced ones as well.

Good News

A few years ago, what I refer to as the field of science communication in conflict-ridden contexts (for convenience, we’ll abbreviate it as “5C”) went through what can only be described as a gothic phase: empirical findings that established the existence and resilience of phenomena such as the “backfire effect” (or “reverse effect”) and motivated reasoning convinced many people that doing 5C is impossible, a waste of time, a sign of arrogance and, ultimately, counterproductive.

But what are these phenomena? The backfire effect occurs when exposure to correct information leads the denier not to re-evaluate their beliefs but to cling to them more intensely, becoming more extreme. Motivated reasoning occurs when the denier mobilizes their own cognitive resources to devise reasons to dismiss the contrary evidence presented.

Although the findings documenting both effects were limited to specific contexts, they were enough to generate a movement of gothic nihilism or, as Natalia Pasternak and I have called it elsewhere, “Odara,” which held that the best public science communication could do was comment on how amazing dinosaur feathers are and hope that, who knows, this would at some point bring a few strays back on track.

On this, Van der Linden brings great news (or bad news, in the case of goths who love ragged bangs and heavy eye shadow): backfire effects and motivated reasoning exist, but they are far rarer than nihilistic propaganda has suggested. Most people are able to recognize a valid correction of their beliefs and change their minds. Quoting the author directly: “‘Belief polarization’ or ‘backfire effects’ are much rarer than first thought, and even when people are motivated, there is a limit (…) people eventually succumb to repeated corrections of their beliefs.”

Bad News

But, as usual, there is a catch: motivated reasoning and backfire effects can be triggered and exacerbated by conditions in the emotional, social, and cultural environment. Polarization is one of the main drivers. And this is where social networks come in.

This is another point where the scientific literature, if read piecemeal, seems to contain contradictory findings, which Van der Linden’s book helps put into perspective: the role of networks like Twitter and Facebook in creating the conditions that lead to hatred, social division and, from there, misinformation and denialism. There are two “naive” models of this: one holds that the networks are the cause of these ills; the other, that they merely reflect them.

The first says that fractures in real society result from behavior on the networks. The second says that behavior on the networks only reflects, or makes visible, real fractures that already existed before the Internet. In general, research by independent scientists tends to reach the first conclusion, while studies funded by the networks (such as a famous paper published in Science) lean toward the second.

The overall assessment offered by “Foolproof” weighs the two views against each other, but with a grim emphasis: networks may not create divisions, many of which are in fact already lurking in the offline world, but they certainly stimulate, encourage, and reward extremism, polarization, and hostility. In short, network dynamics accelerate, if not cause, the transformation of disagreement into hostility and divergence into hatred, pushing opponents into opposite corners until they become enemies.

Van der Linden is particularly critical of the argument, used by platforms such as Facebook, that polarization and hostility are “bad for business,” so there is no incentive for algorithms to radicalize users. The psychologist writes that this is not what the numbers and the science have shown: negative and hostile content is what generates the most engagement, and engagement is the metric the networks prize most.

“Do social media algorithms really provide gateways to disinformation, polarization, and extremism?” asks the researcher, who then replies: “We’ve barely begun to scratch the surface of the problem, but the results are troubling.” He cites a study of 50,000 Americans showing that when people browse the web on their own, their information “diet” is less polarized and radical than when they follow links offered on social networks.

Sandwich

The book is structured so that each chapter ends with a series of practical suggestions, based on the science presented, on how to combat misinformation. The general conclusion is that prevention (through “inoculation” or “prebunking” strategies) is better than cure: the correction of a false claim always competes, in the deceived person’s mind, with the earlier statement of the error. Also, when it is necessary to issue a denial, rebuttal, or correction, it is best to structure the message so as to avoid the emotional triggers that lead to polarization.

“Foolproof” provides an outline and description of the “truth sandwich,” a text structure (it also works for video scripts and other media) that is the most effective form of debunking, at least according to the science available to date. There is a good step-by-step explanation of the “sandwich” in the “Debunking Handbook,” which I highly recommend reading.

In short, the “sandwich” approach holds that facts should dominate the narrative: the first and last thing the reader (or viewer, or listener) should find in the content is the truth of the matter. The truth is the “bread” of the sandwich. The “meat,” in turn, consists of the myth being debunked (making it clear that it is a myth), an explanation of how we know the claim is false, and a dissection of the dishonest maneuvers used to make it seem plausible.

Writing in “sandwich” form isn’t very intuitive: the most natural thing for most people is to start with the myth and, through a logical chain of arguments, arrive at the truth. Psychologically, though, this is a risky approach. The inattentive reader, or one who stops halfway through, will be left with a restatement of the myth in their head and only a vague idea of the errors involved.

Serum

The last third of the work is devoted to specific research by Van der Linden and colleagues on the use of “inoculation” or “vaccination” strategies to counter the social effects of disinformation and psychological manipulation. The general concept, supported by empirical evidence, is that people exposed to “weakened” versions of lies or manipulative techniques acquire some measurable resistance to falling victim to those lies or techniques in the real world.

It’s worth looking a little more closely at what “weakened” means in this context; remember that, in the “sandwich” scheme, it’s generally a good idea to flag the myth as a myth. Weakening occurs when a falsehood or fallacy is presented in a limited, instructive, and didactic manner, preceded by a warning that it is harmful content or a hoax and, if possible, in a humorous and satirical context. Van der Linden describes a hypothetical “inoculation” game against political polarization in which the bone of contention is the use of pineapple on pizza, and another, against the recruitment of young people by radical groups, in which the aim is supposedly to recruit terrorists for the Liberation Front against Ice (whose “cause” is bombing the polar ice caps).

Under these conditions, inoculated subjects show an improvement in the ability to detect and reject lies and manipulation. The size of that improvement can vary greatly depending on the context, the technique used, the medium adopted, and the subject of the disinformation, among other factors. As with many biological vaccines, these gains require periodic booster doses in order to be maintained.

And since I mentioned biological vaccines, it’s fair to add that a growing number of researchers in fields such as immunology and microbiology are concerned that the analogy between psychological inoculation and the physiological immune system is being stretched a bit too far: the risk is that the analogy becomes so vivid that it ends up being taken literally or, worse, that key concepts of the field it borrows from end up being distorted to better serve the metaphor (neither of which is a problem with “Foolproof,” by the way).

As I have noted in other writings, however, the bridge between illustrative metaphor and pseudoscientific delusion is short and easy to cross, even more so when the concepts involved have caught on in popular culture and are seen as “sexy.” It would be unfortunate if a hitherto fruitful and promising area ended up getting lost along the way.

Carlos Orci is a journalist and editor-in-chief of Revista Questão de Ciência, author of “O Livro dos Milagres” (Editora da Unesp), “O Livro da Astrologia” (KDP), and “Negacionismo” (Editora de Cultura), and co-author of “Pure Picaretagem” (Leya), “Science in Daily Life” (Editora Contexto), which won the Jabuti Prize, and “Against Reality” (Papirus 7 Mares).
